Game

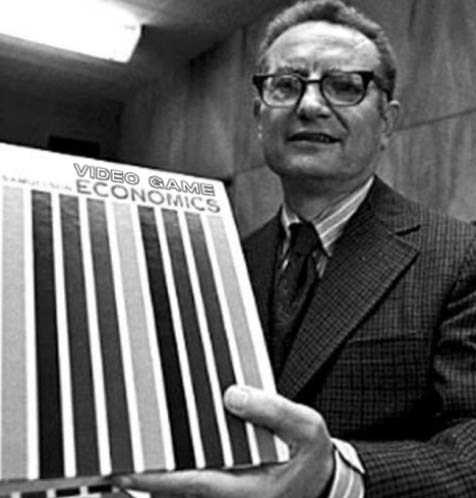

Economist

Consulting

ECONOMISTS SHAPE SUCCESSFUL COMPANIES.

THEY CAME FOR GOOGLE, UBER & AMAZON.

NOW THEY’RE COMING FOR GAMES.

SERVICES

Economic Design & Counsel

Economic State-of-the-Union

Review live game and token data and constraints. Assess the strengths and weaknesses of the current trajectory. Meet periodically to discuss findings and decide on actionable responses.

Analytics & Product Support

Design telemetry and reporting across SQL, Tableau, and R Shiny. Set up and run experiments. Provide product support for UI/UX, LiveOps tooling, and roadmaps.

Discovery Sprint

Spend 32 hours together to identify opportunities and risks. A low-commitment way to get started fast.

One-Shot

Work together to define a set of deliverables and a fixed number of hours.

Retainer

Meet periodically and complete agreed-upon deliverables.

Blockchain / Crypto

Work together to build sustainable and profitable game economies and tokens.

HD / Mobile

Cosmetics, battle pass, systems design, IAP, and store optimization.

Other

Gamification across theme parks, social media, and tech apps.